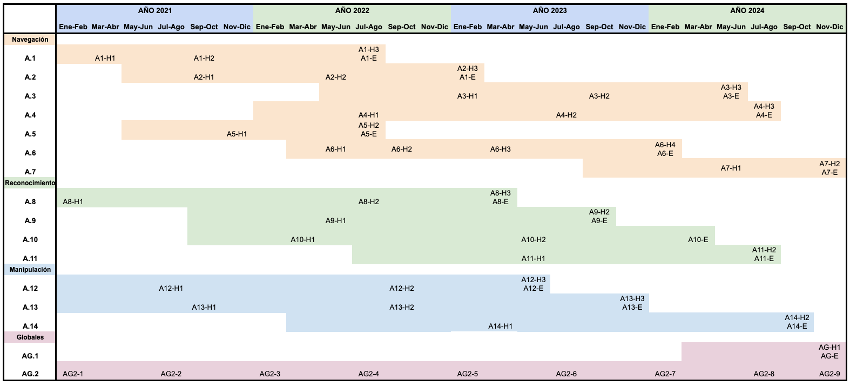

PROMETEO2021

Towards Further Integration of Intelligent Robots in Society: Navigate, Recognize and Manipulate


In recent years, the number of robots performing tasks autonomously across multiple fields and sectors has gradually increased. Today, robots carry out repetitive tasks in controlled environments and take on complex and sometimes dangerous work. However, having robots operate in uncontrolled environments, where objects and moving elements (such as people and other robots) are present and the robot must move between different points in the scene, poses notable challenges that must be addressed before robots can be more fully integrated into such scenarios. This research project addresses these challenges along three specific lines: navigation, recognition, and manipulation, with the aim of advancing both the integration of robots and their task performance in these environments. First, the presence of humans in these social environments must be taken into account, since their possible movements and behavior affect how robots should move and, ultimately, navigate within these scenarios. Second, environment recognition needs to advance, so that identifying the scenario makes robot localization within it more robust and precise. Finally, the project will address object manipulation by these robots, considering both the flexibility in shape and the deformability of the objects.

Project PROMETEO 075/2021 is funded by the Consellería de Innovación, Universidades, Ciencia y Sociedad Digital de la Generalitat Valenciana.

Navigation Line

Recognition Line

Manipulation Line

Global Activities

AG.1: Experimentation and validation tests.

AG.2: Coordination, management and dissemination of results.


News

© Automation, Robotics and Computer Vision Lab. (ARVC) - UMH